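Now that we have tried out TiDB, let's combine it with k8s. I will walk through a complete TiDB Operator installation, then configure a TiDB Deployment that connects to the PD we set up yesterday.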

First, install TiDB Operator with Helm.

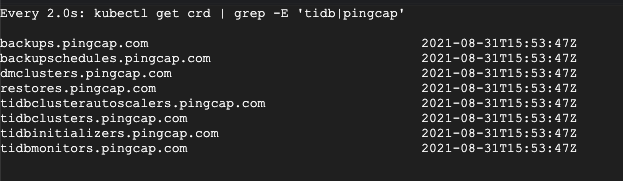

kubectl apply -f https://raw.githubusercontent.com/pingcap/tidb-operator/master/manifests/crd.yaml

helm repo add pingcap https://charts.pingcap.org/

mkdir -p ${HOME}/tidb-operator && \
helm inspect values pingcap/tidb-operator --version=${chart_version} > ${HOME}/tidb-operator/values-tidb-operator.yaml

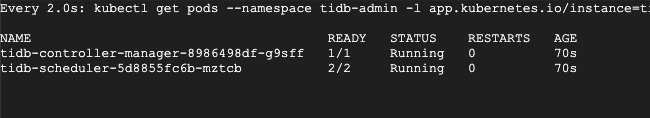

kubectl create ns tidb-admin

helm install tidb-operator pingcap/tidb-operator --namespace=tidb-admin --version=${chart_version} -f ${HOME}/tidb-operator/values-tidb-operator.yaml
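
Before moving on, it helps to confirm that the operator pods actually came up. The commands above also assume `chart_version` is already set in your shell, for example `export chart_version=v1.2.0` (that value is only an illustration; use the chart version you intend to install). The helper below is a minimal sketch: `all_running` is a hypothetical function, not part of kubectl or TiDB Operator, and the label selector in the usage comment is an assumption based on the Helm release name used above.

```shell
#!/bin/sh
# all_running: read pod phases (whitespace separated) on stdin and succeed
# only when there is at least one phase and every phase is "Running".
# Hypothetical helper for illustration; not part of kubectl or TiDB Operator.
all_running() {
  awk '
    { for (i = 1; i <= NF; i++) { n++; if ($i != "Running") bad = 1 } }
    END { exit (n > 0 && !bad) ? 0 : 1 }
  '
}

# Usage against a live cluster (the label selector is an assumption):
#   kubectl get pods --namespace tidb-admin \
#     -l app.kubernetes.io/instance=tidb-operator \
#     -o jsonpath='{.items[*].status.phase}' | all_running \
#     && echo "tidb-operator is up"
```

If the check fails, `kubectl describe pod` in the `tidb-admin` namespace is usually the quickest way to see why a pod is stuck.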

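With that in place, you can start deploying TiDB components through the operator. The project provides a reference YAML that lists the available parameters for a TiDB cluster.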

https://github.com/pingcap/tidb-operator/blob/master/examples/advanced/tidb-cluster.yaml

Next, let's try a TiDB Deployment written to work with the PD running on a VM.

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: tidb
    app.kubernetes.io/instance: demo
  name: demo-tidb
  namespace: tidb
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/component: tidb
      app.kubernetes.io/instance: demo
  template:
    metadata:
      annotations:
        prometheus.io/path: /metrics
        prometheus.io/port: "10080"
        prometheus.io/scrape: "true"
      creationTimestamp: null
      labels:
        app.kubernetes.io/component: tidb
        app.kubernetes.io/instance: demo
    spec:
      affinity: {}
      containers:
      - command:
        - sh
        - -c
        - touch /var/log/tidb/slowlog; tail -n0 -F /var/log/tidb/slowlog;
        image: busybox:1.26.2
        imagePullPolicy: IfNotPresent
        name: slowlog
        resources:
          limits:
            cpu: 100m
            memory: 10Mi
          requests:
            cpu: 20m
            memory: 5Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /var/log/tidb
          name: slowlog
      - command:
        - /bin/sh
        - /usr/local/bin/tidb_start_script.sh
        env:
        - name: CLUSTER_NAME
          value: demo
        - name: BINLOG_ENABLED
          value: "false"
        - name: SLOW_LOG_FILE
          value: /var/log/tidb/slowlog
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        image: pingcap/tidb:v5.0.1
        imagePullPolicy: IfNotPresent
        name: tidb
        ports:
        - containerPort: 4000
          name: server
          protocol: TCP
        - containerPort: 10080
          name: status
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          tcpSocket:
            port: 4000
          timeoutSeconds: 1
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /etc/podinfo
          name: annotations
          readOnly: true
        - mountPath: /etc/tidb
          name: config
          readOnly: true
        - mountPath: /usr/local/bin
          name: startup-script
          readOnly: true
        - mountPath: /var/log/tidb
          name: slowlog
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: annotations
      - configMap:
          defaultMode: 420
          items:
          - key: config-file
            path: tidb.toml
          name: demo-tidb-0
        name: config
      - configMap:
          defaultMode: 420
          items:
          - key: startup-script
            path: tidb_start_script.sh
          name: demo-tidb-0
        name: startup-script
      - emptyDir: {}
        name: slowlog

---

apiVersion: v1
data:
  config-file: |
    [log]
      [log.file]
        max-backups = 3
  startup-script: |
    #!/bin/sh

    # This script is used to start tidb containers in kubernetes cluster

    # Use DownwardAPIVolumeFiles to store information about the cluster:
    # https://kubernetes.io/docs/tasks/inject-data-application/downward-api-volume-expose-pod-information/#the-downward-api
    #
    #   runmode="normal/debug"
    #

    set -uo pipefail

    ANNOTATIONS="/etc/podinfo/annotations"

    if [[ ! -f "${ANNOTATIONS}" ]]
    then
        echo "${ANNOTATIONS} doesn't exist, exiting."
        exit 1
    fi
    source ${ANNOTATIONS} 2>/dev/null
    runmode=${runmode:-normal}
    if [[ X${runmode} == Xdebug ]]
    then
        echo "entering debug mode."
        tail -f /dev/null
    fi

    # Use HOSTNAME if POD_NAME is unset for backward compatibility.
    POD_NAME=${POD_NAME:-$HOSTNAME}

    # PD_IP below is a placeholder: replace it with the address of the existing PD.
    ARGS="--store=tikv \
    --advertise-address=${POD_IP} \
    --host=0.0.0.0 \
    --path=PD_IP:2379 \
    --config=/etc/tidb/tidb.toml
    "

    if [[ X${BINLOG_ENABLED:-} == Xtrue ]]
    then
        ARGS="${ARGS} --enable-binlog=true"
    fi

    SLOW_LOG_FILE=${SLOW_LOG_FILE:-""}
    if [[ ! -z "${SLOW_LOG_FILE}" ]]
    then
        ARGS="${ARGS} --log-slow-query=${SLOW_LOG_FILE:-}"
    fi

    echo "start tidb-server ..."
    echo "/tidb-server ${ARGS}"
    exec /tidb-server ${ARGS}
kind: ConfigMap
metadata:
  labels:
    app.kubernetes.io/component: tidb
    app.kubernetes.io/instance: demo
  name: demo-tidb-0
  namespace: tidb

---

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: tidb
    app.kubernetes.io/instance: demo
  name: demo-tidb
  namespace: tidb
spec:
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: mysql-client
    port: 4000
    protocol: TCP
    targetPort: 4000
  - name: status
    port: 10080
    protocol: TCP
    targetPort: 10080
  selector:
    app.kubernetes.io/component: tidb
    app.kubernetes.io/instance: demo
  sessionAffinity: None
  type: ClusterIP

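Change the PD_IP in this YAML's ConfigMap to the IP of the physical PD, and TiDB will connect to the existing PD. One note on how PD works: although PD is a distributed management service, it is the leader that actually receives and answers requests. For that reason, PD should run somewhere every TiDB instance can reach, so that whichever member is the leader can respond to all of them.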
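
The PD_IP substitution can be scripted instead of edited by hand. The following is a minimal sketch: `render_pd_addr` is a hypothetical helper, and the file name `tidb-deployment.yaml` and the address `10.0.0.5` in the usage comment are placeholders, not values from this setup.

```shell
#!/bin/sh
# render_pd_addr: read a manifest on stdin and replace the PD_IP placeholder
# with the address passed as $1 (an IP or hostname, so it must not contain "/").
# Hypothetical helper for illustration; not part of TiDB Operator.
render_pd_addr() {
  sed "s/PD_IP:2379/$1:2379/g"
}

# Usage (file name and address are placeholders; the tidb namespace must exist):
#   kubectl create ns tidb
#   render_pd_addr 10.0.0.5 < tidb-deployment.yaml | kubectl apply -f -
```

Because only the leader answers requests, it is worth checking from inside the cluster that every PD member's client port is reachable, not just the one you pointed `--path` at. PD's HTTP API under `/pd/api/v1/members` should show which member currently holds leadership.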